#39: Agent-Oriented Programming: Building Secure, Execution-Ready AI Agent Systems.

Engineer, Scientist, Author: #FrontierAISecurity via #GenerativeAI, #Cybersecurity, #AgenticAI @AIwithKT.

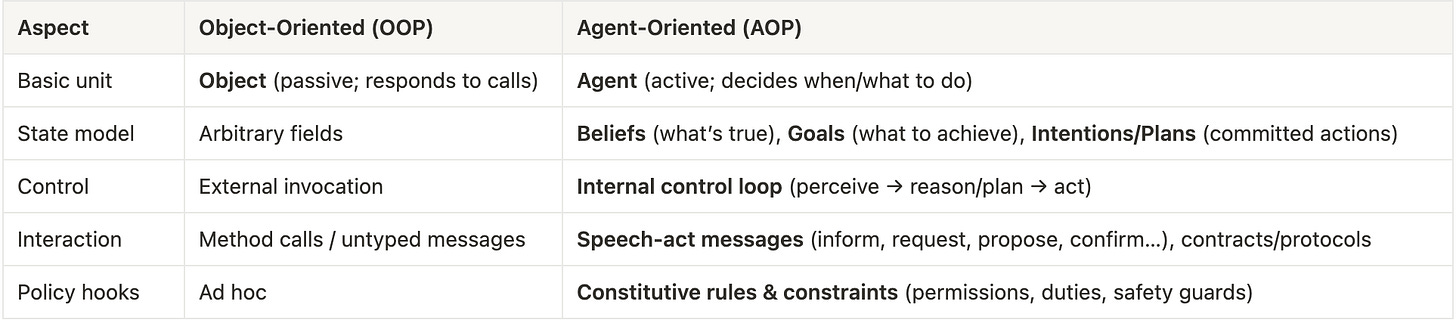

TL;DR - agent-oriented programming (AOP) treats software components as autonomous agents with beliefs, goals, and plans that perceive → decide → act → coordinate. Compared to OOP’s passive objects, AOP gives you first-class building blocks for multi-agent workflows, policy-constrained autonomy, and resilient orchestration. For security programs, AOP can be how you turn “agentic AI” into a governable, auditable, zero-trust-compatible system rather than a pile of brittle scripts.

This post covers:

What AOP is (and isn’t), with a clear contrast to OOP;

How to apply AOP to SOC automation, incident response, posture management, GRC;

A minimal agent loop in Python, plus pointers to production frameworks;

A reference security architecture, maturity plan, pitfalls, KPIs;

Curated, up-to-date resources at the end!

It builds on earlier posts on the agentic security paradigm, orchestration layers, and multi-agent design.

Why AOP now (and why security cares)

Attackers are increasingly “agentic.”

Modern campaigns are starting to look less like one-off exploits and more like autonomous playbooks: scripts call cloud and SaaS APIs, query external intel, spin up LLMs to personalize phishing or summarize loot, and loop continuously until goals are met. Toolchains mix commodity loaders, headless browsers, API clients, and decision nodes that adapt to defender responses (rate limits, MFA prompts, geo-blocks). In cloud environments, we already see, and will see more of, iterative behavior: enumerate → test policy edges → escalate → exfiltrate → persist, with each step picking the next move based on feedback. If defenders keep shipping point automations - a webhook here, a Lambda there - our responses stay brittle and reactive. We need software components that can perceive state, reason about objectives, coordinate with peers, and change tactics - the behaviors AOP formalizes.

Enterprise adoption has crossed the threshold.

What used to be weekend research repos is now packaged: major vendors ship agent builders, multi-agent orchestration, tool adapters, long-running state, and event-driven triggers. Platforms expose guardrails, approvals, and audit hooks so agents can operate in real environments - ticketing, IdP, EDR, cloud, data lakes - without bespoke glue for each team. That matters for security because our stack is inherently heterogeneous; an approach that treats agents as first-class, composable services lets you introduce autonomy where it helps (think triage, enrichment, containment proposals) and keep humans where judgment is essential, all without rewriting everything around them.

Governance pressure is rising.

Boards, auditors, and regulators now ask three questions on every autonomous action: who authorized it, under which policy, and where’s the evidence. Email threads and ad-hoc scripts won’t cut it when decisions are machine-driven and time-sensitive. Architecturally, this implies three non-negotiables:

Bounded autonomy - actions are classified by impact; high-impact intentions require approvals, simulations, or dual control.

Typed communications - agents exchange structured messages (“inform,” “request,” “propose,” “confirm”) that can be validated, versioned, and searched.

Attribution and replayability - every message and action carries identity, provenance, and a decision trace so you can reconstruct the “why” and replay scenarios for audit and improvement.
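To make the second and third requirements concrete, here is a minimal sketch of a message envelope that carries both the speech act and the attribution fields. The `Envelope` class, its field names, and the choice of four performatives are illustrative assumptions drawn from the list above, not a standard or a framework API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Any, Dict
import uuid


class Performative(str, Enum):
    INFORM = "inform"
    REQUEST = "request"
    PROPOSE = "propose"
    CONFIRM = "confirm"


@dataclass(frozen=True)
class Envelope:
    """Hypothetical typed message: a speech act plus attribution fields."""
    performative: Performative
    sender: str            # agent identity, e.g. taken from an mTLS cert or JWT
    recipient: str
    policy_version: str    # policy the sender was operating under
    body: Dict[str, Any]
    schema_version: str = "1.0"
    message_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    sent_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def __post_init__(self) -> None:
        # Coerce strings to the enum; unknown speech acts raise ValueError.
        object.__setattr__(self, "performative", Performative(self.performative))
        for name in ("sender", "recipient", "policy_version"):
            if not getattr(self, name):
                raise ValueError(f"missing attribution field: {name}")


msg = Envelope("request", "AnalystAgent", "ResponseAgent", "policy-v3",
               {"action": "isolate_identity"})
```

Because the envelope is frozen and every field is plain data, it can be serialized, searched, and replayed later without guessing what the sender meant.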

AOP gives us the vocabulary and the scaffolding.

Instead of wiring opaque prompt chains, AOP lets you design with roles, goals, beliefs, intentions, and protocols. Agents become accountable principals: they own objectives, speak well-typed “speech acts,” and operate under explicit constitutions (policies) enforced at runtime. The scaffolding - mailboxes/queues with identity, schema-validated messages, plan libraries with preconditions/rollbacks, guardrail services for approvals - maps one-to-one with security needs. You gain systems that are adaptive enough to keep up with attackers and governable enough to satisfy audit. In short: AOP aligns autonomy with assurance, so you can scale agentic capabilities without sacrificing control.

AOP in one page (vs. OOP)

Think of agents as secure microservices with a brain and a radio. They own their goals, talk to peers through typed messages, and can be reasoned about at the level of intent and obligation, not just function signatures.

Minimal agent loop (Python)

You don’t need a heavy framework to start thinking in AOP. This stripped-down loop shows the core:

    from typing import Any, Dict, List


    class Agent:
        def __init__(self, name: str, goals: List[str]):
            self.name = name
            self.beliefs: Dict[str, Any] = {}
            self.goals = goals
            self.intentions: List[Dict] = []  # planned actions

        def perceive(self, events: List[Dict]):
            for e in events:
                self.beliefs[e["key"]] = e["value"]

        def deliberate(self):
            # trivial policy: if suspicious_auth and goal "maintain_integrity" then plan a response
            if self.beliefs.get("suspicious_auth") and "maintain_integrity" in self.goals:
                self.intentions.append({"type": "request", "to": "ResponseAgent",
                                        "action": "isolate_identity",
                                        "evidence": self.beliefs["suspicious_auth"]})

        def act(self, send):
            while self.intentions:
                msg = self.intentions.pop(0)
                send(msg)  # typed message to another agent

    # Example "mailbox" send function would enforce ACLs, schemas, and audit logging.

Production systems add…

Typed messages (schemas & protocols), retries, and delivery guarantees;

Policy checks (who can request/approve which actions);

Plans with preconditions/effects, rollbacks, and compensations;

Memory (episodic logs, tool outcomes) and tooling (search, RAG, device control);

Observability (per-message lineage, decision traces).
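As a rough illustration of the mailbox idea, the sketch below shows one way a `send` function could enforce an ACL, a minimal schema, and audit logging. Everything here is hypothetical: `Mailbox`, `ACL`, and `REQUIRED_KEYS` are made-up names, the queues are in-memory, and a production system would sit on a durable broker with retries and delivery guarantees.

```python
from collections import defaultdict, deque
from typing import Any, Callable, Deque, Dict, List, Set, Tuple

# Hypothetical ACL: (sender, recipient) -> message types that pair may exchange.
ACL: Dict[Tuple[str, str], Set[str]] = {
    ("TriageAgent", "ResponseAgent"): {"request", "inform"},
}
REQUIRED_KEYS = {"type", "to", "action", "evidence"}  # assumed minimal schema


class Mailbox:
    def __init__(self) -> None:
        self.queues: Dict[str, Deque[Dict[str, Any]]] = defaultdict(deque)
        self.audit_log: List[Dict[str, Any]] = []

    def sender_for(self, sender: str) -> Callable[[Dict[str, Any]], bool]:
        """Return a send() bound to one identity so agents cannot spoof 'from'."""
        def send(msg: Dict[str, Any]) -> bool:
            missing = REQUIRED_KEYS - set(msg)
            allowed = ACL.get((sender, msg.get("to", "")), set())
            if missing:
                verdict = f"rejected: missing {sorted(missing)}"
            elif msg["type"] not in allowed:
                verdict = "rejected: not permitted by ACL"
            else:
                verdict = "delivered"
                self.queues[msg["to"]].append({**msg, "from": sender})
            # Every attempt is logged, including rejections.
            self.audit_log.append({"from": sender, "msg": msg, "verdict": verdict})
            return verdict == "delivered"
        return send
```

The closure returned by `sender_for("TriageAgent")` has the same shape as the `send` argument that `Agent.act` expects, so the loop above could use it unchanged.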

Where AOP lands in the enterprise

Common agent roles (start here and expand):

Sensor/Collector Agents –> stream logs, cloud events, SaaS signals; normalize + enrich.

Triager/Analyst Agents –> correlate alerts, prioritize, draft investigations; ask peers for data.

Responder/Remediator Agents –> propose and (with policy approval) execute playbook steps.

Posture/Compliance Agents –> continuously test controls, generate evidence, open tickets.

Orchestrator/Coordinator Agents –> maintain the global plan; resolve conflicts; escalate.

Policy/Safety Agent –> gate high-impact actions; enforce SoD (separation of duties), rate limits, and guardrails.

High-value use cases (90-120 days): alert triage, phishing response, IAM hygiene, misconfig drift detection, SaaS posture fixes, vendor evidence collection, and control testing. Each maps cleanly onto agent roles and messages.

A secure reference architecture (overview)

Agent Runtime Layer

Long-running workers with durable state; per-agent identity (mTLS/JWT), stable mailboxes/queues

Typed messages (e.g., FIPA-style speech acts) with schemas in your repo; strict versioning

Policy and Safety Layer

Constitutions: org-level rules (who may propose/approve/execute which action classes)

Action gates: human-in-the-loop for high-impact intents; auto-approval for low-risk

Sandboxes & scopes: least-privilege tokens, constrained tool adapters, egress filters

Tooling and Knowledge Layer

Connectors (ticketing, EDR, IdP, cloud, SaaS), search/RAG with red-teamed corpora, rate-limited APIs

Observability and Assurance

Per-message lineage (who said what to whom, when, under which policy)

Decision traces (inputs → plan → action → outcome), replay harnesses, chaos tests for autonomy

Orchestration and Governance

Orchestrator agent(s) coordinating plans, deadlines, and resource allocation

GRC integration: map agent actions to controls (e.g., NIST 800-53, ISO 27001) and produce evidence
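The "Constitutions" item in the Policy and Safety Layer can be pictured as plain data with a default-deny lookup. This is a sketch under assumptions: the role names, verbs, and action classes below are invented for illustration, and a real deployment would load them from a versioned policy store.

```python
from typing import Dict, Set

# Hypothetical constitution: role -> verb -> action classes that role may touch.
CONSTITUTION: Dict[str, Dict[str, Set[str]]] = {
    "analyst":   {"propose": {"containment", "enrichment"}},
    "policy":    {"approve": {"containment"}},
    "responder": {"execute": {"containment"}},
}


def permitted(role: str, verb: str, action_class: str) -> bool:
    """Default deny: unknown roles, verbs, or action classes are refused."""
    return action_class in CONSTITUTION.get(role, {}).get(verb, set())
```

Keeping the rules as data rather than code means the same table can drive runtime checks and be reviewed like any other change in the repository.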

Adoption without the theatre

You don’t need a 200-page blueprint. Pick a single, bounded workflow (e.g., “impossible-travel” alerts). Model three roles - Sensor → Analyst → Responder - and the two or three messages they must exchange. Encode those messages as schemas; give each agent an identity; log every message and decision. Put a policy agent in front of any action that writes to production systems.
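The three-role flow described above could start as small as this. It is a sketch, not a reference implementation: the function names, the message fields, and the 900 km/h threshold are assumptions chosen for illustration, and the login event is assumed to arrive with distance and elapsed time already computed.

```python
from typing import Any, Dict, List, Optional

LOG: List[Dict[str, Any]] = []   # every message is recorded
MAX_KMH = 900.0                  # assumed threshold, roughly airliner speed


def emit(sender: str, recipient: str, kind: str, body: Dict[str, Any]) -> Dict[str, Any]:
    msg = {"from": sender, "to": recipient, "type": kind, "body": body}
    LOG.append(msg)
    return msg


def sensor(login: Dict[str, Any]) -> Dict[str, Any]:
    return emit("Sensor", "Analyst", "inform", login)


def analyst(msg: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    b = msg["body"]
    speed = b["km_from_last_login"] / max(b["hours_since_last_login"], 1e-6)
    if speed <= MAX_KMH:
        return None  # plausible travel, nothing to propose
    return emit("Analyst", "Responder", "propose",
                {"action": "revoke_sessions", "user": b["user"], "kmh": round(speed)})


def policy_gate(msg: Dict[str, Any], approved_by: Optional[str]) -> bool:
    # In this sketch, anything that writes to production needs a named approver.
    ok = approved_by is not None
    emit("Policy", msg["to"], "confirm" if ok else "inform",
         {"re": msg["body"]["action"], "approved_by": approved_by})
    return ok


def responder(msg: Dict[str, Any], approved: bool) -> str:
    return f"executed {msg['body']['action']}" if approved else "held for review"


login = {"user": "alice", "km_from_last_login": 8000, "hours_since_last_login": 2}
proposal = analyst(sensor(login))
outcome = responder(proposal, policy_gate(proposal, approved_by="oncall-lead"))
```

Three messages end up in `LOG` for this one case, which is the raw material for the schemas and audit trail the paragraph calls for.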

When that first flow is stable, generalize: extract the messages into a shared protocol package; turn playbook steps into reusable “capabilities” (quarantine identity, revoke token, snapshot VM). Add an orchestrator to coordinate multiple cases and deadlines. Expand connectors slowly; keep adapters least-privileged and versioned. This is how you move from “demo” to “platform” without the big-bang rewrite.

How do you know it’s actually working? First, skip the flashy counts.

Track four things:

(1) how accurate the automated decisions are,

(2) how much faster you acknowledge and contain issues versus your baseline,

(3) what percentage of actions ran under the right policy with full evidence attached, and

(4) how often humans had to redo or roll back an agent’s work.

If those trends aren’t improving, you’re likely running a scripted assistant - not yet a governed agent system.
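If each agent action is logged as a record, the four measures reduce to a few lines of arithmetic. The sketch below assumes a hypothetical `ActionRecord` shape and metric names; how "correct" is judged and what the baseline is are decisions the code cannot make for you.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List


@dataclass
class ActionRecord:
    correct: bool              # did a reviewer agree with the decision?
    minutes_to_contain: float
    policy_ok: bool            # ran under the right policy
    evidence_attached: bool
    rolled_back: bool          # a human had to redo or undo it


def kpis(records: List[ActionRecord], baseline_minutes: float) -> Dict[str, float]:
    if not records or baseline_minutes <= 0:
        raise ValueError("need at least one record and a positive baseline")
    n = len(records)
    return {
        # (1) share of automated decisions judged correct
        "decision_accuracy": sum(r.correct for r in records) / n,
        # (2) median containment time relative to baseline (below 1.0 is faster)
        "containment_time_vs_baseline":
            median(r.minutes_to_contain for r in records) / baseline_minutes,
        # (3) share of actions with both the right policy and evidence
        "governed_action_rate":
            sum(r.policy_ok and r.evidence_attached for r in records) / n,
        # (4) share of actions humans had to redo or roll back
        "rework_rate": sum(r.rolled_back for r in records) / n,
    }
```

Computing these per week or per release gives you the trend lines; a single snapshot says little on its own.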

Common failure modes and their antidotes

1) Prompt chains posing as agents

What goes wrong: you have clever LLM prompts glued together, but no persistent state, no typed messages, and no way to explain why a step happened. It “chats,” it doesn’t reason.

Antidote: model agents with explicit beliefs, goals, and intentions; persist state between steps; define message schemas (e.g., inform/request/propose with JSON contracts) and validate them at the boundary. Treat conversations as APIs with inputs/outputs and policies, not transcripts. A quick test: can you replay the same inputs and get the same plan under policy?
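The replay test can be made mechanical if planning is a pure function of recorded inputs. The following is a minimal sketch under that assumption; `plan`, `fingerprint`, and `replay_matches` are hypothetical names, and a step that calls an LLM would likely need its output recorded alongside the inputs for the comparison to hold.

```python
import hashlib
import json
from typing import Any, Dict, List


def plan(beliefs: Dict[str, Any], goals: List[str],
         policy_version: str) -> List[Dict[str, Any]]:
    """Pure function of its inputs: no clock, no randomness, no hidden state."""
    steps: List[Dict[str, Any]] = []
    if beliefs.get("suspicious_auth") and "maintain_integrity" in goals:
        steps.append({"type": "request", "to": "ResponseAgent",
                      "action": "isolate_identity",
                      "evidence": beliefs["suspicious_auth"],
                      "policy_version": policy_version})
    return steps


def fingerprint(obj: Any) -> str:
    # Canonical JSON (sorted keys) so equal plans hash identically.
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode()).hexdigest()


def replay_matches(recorded: Dict[str, Any]) -> bool:
    """Re-run a recorded case and compare against the stored plan hash."""
    replayed = plan(recorded["beliefs"], recorded["goals"],
                    recorded["policy_version"])
    return fingerprint(replayed) == recorded["plan_hash"]
```

Storing the plan hash with each case turns "can you replay it?" into a regression test you can run on every policy or code change.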

2) Free-roaming autonomy

What goes wrong: an agent can execute high-impact actions (disable SSO, quarantine production hosts) without the right gates. That’s how “assistants” become incident sources.

Antidote: classify action impact tiers (read, propose, execute, destructive). Require approvals, simulation, or dual control (SoD) for high-impact intentions. Enforce rate limits and scopes on credentials. Add a circuit breaker that can pause whole classes of actions system-wide and default to safe rollback plans.
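One possible shape for the tiering and the circuit breaker is sketched below. The tier names follow the paragraph above; the action-to-tier table, the approval counts (one for execute, two for destructive), and the `gate` function are assumptions made for illustration.

```python
from enum import IntEnum
from typing import Dict, Set


class Tier(IntEnum):
    READ = 0
    PROPOSE = 1
    EXECUTE = 2
    DESTRUCTIVE = 3


# Hypothetical classification of actions into impact tiers.
ACTION_TIERS: Dict[str, Tier] = {
    "lookup_user": Tier.READ,
    "revoke_token": Tier.EXECUTE,
    "disable_sso": Tier.DESTRUCTIVE,
}
PAUSED: Set[Tier] = set()   # circuit breaker: tiers currently halted system-wide


def gate(action: str, requester: str, approvers: Set[str]) -> str:
    # Unknown actions are treated as the strictest tier.
    tier = ACTION_TIERS.get(action, Tier.DESTRUCTIVE)
    if tier in PAUSED:
        return "blocked: circuit breaker open"
    independent = approvers - {requester}   # self-approval never counts
    needed = {Tier.EXECUTE: 1, Tier.DESTRUCTIVE: 2}.get(tier, 0)
    if len(independent) < needed:
        return f"pending: needs {needed} independent approval(s)"
    return "allowed"
```

Adding a tier to `PAUSED` stops that whole class of actions at once, regardless of which agent asks or who has approved.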

3) Opaque decisions

What goes wrong: an outcome appears (“user disabled”), but no one can reconstruct who authorized it, under which policy, or based on what evidence. You can’t pass audit or do a fair post-mortem.

Antidote: persist decision traces by default: inputs → plan/intent → policy checks → action → outcome. Stamp each message with identity, timestamps, and policy version. Reject or sandbox any action that lacks provenance. Make traces queryable and replayable for audit and continuous testing.
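A decision trace can be as simple as one record per attempted action, written whether or not the action runs. In this sketch the `DecisionTrace` fields mirror the inputs → intent → policy checks → action → outcome chain above; the required provenance fields and the in-memory `TRACES` list are assumptions standing in for a real append-only store.

```python
from dataclasses import asdict, dataclass, field
from typing import Any, Callable, Dict, List

# Assumed minimum a request must carry before it may run.
REQUIRED_FIELDS = ("action", "actor", "policy_version", "evidence")


@dataclass
class DecisionTrace:
    inputs: Dict[str, Any]
    intent: str
    policy_checks: List[str] = field(default_factory=list)
    action: str = ""
    outcome: str = ""


TRACES: List[Dict[str, Any]] = []   # stand-in for an append-only, queryable store


def execute(request: Dict[str, Any], run: Callable[[Dict[str, Any]], str]) -> str:
    """Run an action only if it carries provenance; persist a trace either way."""
    trace = DecisionTrace(inputs=request, intent=request.get("action", "unknown"))
    missing = [k for k in REQUIRED_FIELDS if not request.get(k)]
    if missing:
        trace.policy_checks.append(f"provenance missing: {missing}")
        trace.outcome = "rejected"
    else:
        trace.policy_checks.append("provenance ok")
        trace.action = request["action"]
        trace.outcome = run(request)
    TRACES.append(asdict(trace))
    return trace.outcome
```

Rejections are traced too, so an auditor can see not only what happened but what was refused and why.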

4) Tool sprawl

What goes wrong: every agent talks directly to every tool (EDR, IdP, cloud, SaaS). Permissions balloon, integrations drift, and a minor API change breaks half the system.

Antidote: put tools behind stable, least-privilege adapters with strict schemas, quotas, and contract tests. Version adapters like libraries, rotate secrets centrally, and change the adapter - not each agent. Keep an “adapter bill of materials” and a deprecation plan so you can swap vendors without rewiring behavior.
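The adapter idea can be sketched as a thin wrapper that owns the scope list and the call budget, so agents never hold vendor credentials or call vendor APIs directly. `ToolAdapter`, `fake_idp`, and the operation names are hypothetical; the fake backend is a test double, not a real IdP client.

```python
from typing import Any, Callable, Dict, FrozenSet


class ToolAdapter:
    """Hypothetical wrapper: agents call the adapter, never the vendor API."""

    def __init__(self, name: str, version: str,
                 backend: Callable[[str, Dict[str, Any]], Any],
                 allowed_ops: FrozenSet[str], quota: int) -> None:
        self.name, self.version = name, version
        self._backend = backend          # the only place vendor specifics live
        self._allowed_ops = allowed_ops  # least privilege: explicit allow-list
        self._remaining = quota          # simple call budget

    def call(self, op: str, params: Dict[str, Any]) -> Any:
        if op not in self._allowed_ops:
            raise PermissionError(f"{self.name} v{self.version}: '{op}' not in scope")
        if self._remaining <= 0:
            raise RuntimeError(f"{self.name}: quota exhausted")
        self._remaining -= 1
        return self._backend(op, params)


def fake_idp(op: str, params: Dict[str, Any]) -> Dict[str, Any]:
    # Stand-in backend for contract tests.
    return {"op": op, "user": params["user"], "ok": True}


idp = ToolAdapter("idp", "1.2.0", fake_idp,
                  frozenset({"get_user", "revoke_sessions"}), quota=2)
```

Swapping vendors then means writing a new backend and re-running the same contract tests against it, while agent behavior stays untouched.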

Bottom line: Treat these as architectural hygiene, not afterthoughts. If you encode state and protocols, bound autonomy, make decisions explainable, and tame integrations behind adapters, you’ll have agents that are both useful and governable.

How this extends the series

In Agentic AI and the New Security Paradigm, I argue that threats are orchestrated - and defenses must be, too. AOP is the engineering discipline that makes that orchestration governable. Beyond the Agent Iceberg surfaced the hidden mass - tools, policy, memory, safety - and AOP gives each an explicit home in the design. Understanding Multi-Agent Systems laid out the why; AOP is the how. And From Unix Pipes to AI Agents likened function pipes to inter-agent flows; AOP turns those flows into typed, auditable protocols that scale and stand up to scrutiny.

The vision

The long-term win isn’t novelty; it’s reliable autonomy. The goal is a system that behaves predictably under pressure, explains itself when asked, and improves without risking the estate. With AOP, you move from a clever single-loop triage to a portfolio of agents that negotiate resources, share context, and coordinate plans - governed by policies that are explicit, testable, and enforced at runtime.

At scale, each agent is treated like a product with clear obligations and SLOs: what it can do, what it must never do, how it proves compliance, and how it fails safe. Decisions carry identity, policy versions, and evidence so they’re auditable and replayable. Actions are bounded by impact tiers, gated when risk is high, and backed by rollbacks. Change control looks like software engineering, not heroics: capabilities are versioned, simulated in sandboxes, and rolled out behind feature flags - with a circuit breaker to pause or revoke autonomy instantly.

Humans stay in the loop where judgment matters - approvals for high-impact intentions, escalation paths when signals conflict, red-team pressure to harden plans - while the agents clear the noise, keep posture tight, and maintain the evidence trail your auditors expect. Over time, feedback from incidents and reviews tunes goals, plans, and guardrails so the fleet gets sharper without getting looser.

The payoff is operational and strategic: faster acknowledgment and containment, lower toil, fewer policy escapes, cleaner audits, and a platform you can extend without rewiring. That’s autonomy we can govern: a future your engineers can ship, your CISO can approve, and your auditors can trust.

Innovating with integrity,

@AIwithKT 🤖🧠