#30: How to Build an Agentic AI System from Scratch [7-min read].

Exploring #FrontierAISecurity via #GenerativeAI, #Cybersecurity, #AgenticAI @AIwithKT.

“Some people call this artificial intelligence, but the reality is this technology will enhance us. So instead of artificial intelligence, I think we’ll augment our intelligence.” — Ginni Rometty

The emergence of Agentic AI (systems capable of independently perceiving, reasoning, acting, and continuously learning) is radically reshaping automation, decision-making, and human-machine interaction across diverse industries. Unlike traditional artificial intelligence, which typically executes predefined, structured tasks within narrow scopes, agentic AI systems operate with a higher degree of autonomy, adapt dynamically to their environments, and learn from their own experience in real time.

This transition from static automation to autonomous intelligence marks a profound shift. Agentic AI doesn't just respond to prompts; it anticipates needs, makes multi-step decisions, and improves through self-directed learning. Consider a cybersecurity agent that defends a network by analyzing emerging threats and deploying countermeasures in real time, or a financial AI that adjusts trading strategies as market conditions shift. In logistics, autonomous agents could reroute shipments as soon as a disruption is detected, reacting faster than manual planning allows.

Yet, this transformative power brings complexity. Building a reliable agentic AI system demands careful attention to design choices, ethical frameworks, transparency mechanisms, and robust guardrails. As these systems increasingly influence human outcomes, we must thoughtfully balance innovation with accountability, and autonomy with ethical oversight.

We’ll walk through a structured approach to building an agentic AI system from the ground up: covering the foundational building blocks, architectural choices, and essential safety considerations.

Let’s dive in!

1. Define Purpose and Scope 📝

Before any technical design, establish why your agent exists and how it will operate:

Problem Statement

Craft a concise mission: e.g., "Autonomously detect and respond to common cybersecurity threats to protect organizational IT systems."

Enumerate success metrics (e.g., mean time to detect, false‑positive rates, automated patch rates).
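To make metrics like these concrete, here is a minimal Python sketch that computes mean time to detect and the share of alerts that were false positives from a list of alert records. The `Alert` record and its fields are hypothetical; adapt them to whatever your SIEM actually exports. Note that "share of alerts that were false" is strictly the false discovery rate; a true false-positive rate also needs the count of benign events the agent correctly ignored.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Optional

@dataclass
class Alert:
    # Hypothetical record: when the threat began, when the agent flagged it,
    # and whether an analyst later confirmed it as a real incident.
    occurred_at: datetime
    detected_at: datetime
    confirmed: bool

def mean_time_to_detect(alerts: list[Alert]) -> Optional[timedelta]:
    """Average detection delay over confirmed (true-positive) alerts."""
    delays = [(a.detected_at - a.occurred_at).total_seconds()
              for a in alerts if a.confirmed]
    return timedelta(seconds=mean(delays)) if delays else None

def false_positive_share(alerts: list[Alert]) -> float:
    """Fraction of raised alerts that were not real incidents."""
    if not alerts:
        return 0.0
    return sum(not a.confirmed for a in alerts) / len(alerts)
```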

Autonomy Spectrum

Advisory Mode: Agent suggests actions; human approves.

Supervised Autonomy: Agent executes routine tasks; escalates exceptions.

Full Autonomy: Agent acts independently within predefined safety bounds.
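One way to encode these three modes is a small routing function that decides, for each proposed action, whether the agent executes it, escalates it, or waits for approval. This is a minimal Python sketch; the `is_routine` and `within_bounds` flags are hypothetical inputs that your own classification and guardrail logic would supply.

```python
from enum import Enum

class AutonomyMode(Enum):
    ADVISORY = "advisory"      # agent suggests; a human approves every action
    SUPERVISED = "supervised"  # agent runs routine actions; escalates exceptions
    FULL = "full"              # agent acts alone within predefined safety bounds

def route_action(mode: AutonomyMode, is_routine: bool, within_bounds: bool) -> str:
    """Decide who handles a proposed action under each autonomy mode.

    is_routine / within_bounds are hypothetical flags from your own logic."""
    if mode is AutonomyMode.ADVISORY:
        return "await_human_approval"
    if mode is AutonomyMode.SUPERVISED:
        return "execute" if is_routine and within_bounds else "escalate"
    # FULL autonomy still stops at the safety bounds.
    return "execute" if within_bounds else "escalate"
```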

Ethical & Safety Constraints

List potential harms: unauthorized data access, over‑blocking legitimate traffic, errant trades.

Define guardrails:

Maximum transaction size for financial bots

Data privacy boundaries for health‑care agents

Human‑override triggers when confidence scores fall below a set threshold
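Guardrails like these are easiest to audit when they live in one explicit policy object that every action is checked against. The Python sketch below is illustrative only: the threshold values and restricted field names are hypothetical placeholders, not recommendations.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Guardrails:
    # Hypothetical limits; real values come from your risk and compliance teams.
    max_transaction_usd: float = 10_000.0
    min_confidence: float = 0.8
    restricted_fields: frozenset = frozenset({"diagnosis", "ssn"})

def check_action(g: Guardrails, amount_usd: float, confidence: float,
                 fields_accessed: set[str]) -> list[str]:
    """Return the list of violated guardrails; an empty list means 'allowed'."""
    violations = []
    if amount_usd > g.max_transaction_usd:
        violations.append("transaction_limit")
    if fields_accessed & g.restricted_fields:
        violations.append("privacy_boundary")
    if confidence < g.min_confidence:
        violations.append("human_override_required")
    return violations
```

Returning every violation, rather than stopping at the first, gives reviewers the full picture when an action is blocked.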

By clarifying these parameters up front, you align stakeholder expectations, reduce scope creep, and embed ethical intent into your development process.

2. Establish Core Building Blocks 🧱

A robust agentic AI comprises four foundational layers, each demanding thoughtful design and technology choices:

2.1 Perception Layer (Sensing the Environment)

Multimodal Data Sources

Network telemetry, system logs, and user interaction streams for cybersecurity agents

Market feeds, financial statements, and social sentiment for trading bots

GPS traffic feeds, weather APIs, and warehouse sensors for logistics coordinators

Preprocessing Pipelines

Normalization & Cleaning: Standardize formats (timestamps, IP formats), remove duplicates

Feature Extraction: Derive meaningful signals (e.g., packet metadata, indicator frequency)

Streaming Architecture: Tools like Apache Kafka or Amazon Kinesis to support low‑latency ingestion

Best Practice: Implement a schema registry (e.g., Confluent Schema Registry) to guarantee consistent data formats across modules.
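The cleaning and schema-enforcement steps above can be sketched in a few lines of Python. This is an in-process stand-in, not the Confluent Schema Registry API: `RAW_SCHEMA_V1` plays the role of a registered schema, and the event fields (`ts`, `src_ip`, `action`) are hypothetical.

```python
import ipaddress
from datetime import datetime, timezone

# Hypothetical "registered" schema for raw events: field -> accepted type(s).
RAW_SCHEMA_V1 = {"ts": (int, float, str), "src_ip": str, "action": str}

def conforms(event: dict, schema: dict) -> bool:
    """True if the event has exactly the schema's fields, each of an accepted type."""
    return event.keys() == schema.keys() and all(
        isinstance(event[k], t) for k, t in schema.items())

def normalize(raw: dict) -> dict:
    """Standardize timestamp, IP, and action formats for one event."""
    return {
        "ts": datetime.fromtimestamp(float(raw["ts"]), tz=timezone.utc),  # epoch secs -> UTC
        "src_ip": str(ipaddress.ip_address(raw["src_ip"].strip())),       # canonical IP text
        "action": raw["action"].strip().lower(),
    }

def preprocess(raw_events: list[dict]) -> list[dict]:
    """Validate each raw event, normalize it, and drop exact duplicates (order kept)."""
    seen, cleaned = set(), []
    for raw in raw_events:
        if not conforms(raw, RAW_SCHEMA_V1):
            raise ValueError(f"schema violation: {raw!r}")
        ev = normalize(raw)
        key = (ev["ts"], ev["src_ip"], ev["action"])
        if key not in seen:
            seen.add(key)
            cleaned.append(ev)
    return cleaned
```

Normalizing before deduplicating matters: two records that differ only in IPv6 formatting or letter case collapse into one.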

2.2 Reasoning Engine (Deciding What to Do)

Large Language Models (LLMs)

Fine‑tune GPT‑4 (where the provider supports fine‑tuning) or LLaMA for domain‑specific reasoning (e.g., interpreting security advisories, parsing legal documents)

Integrate through frameworks like LangChain or LlamaIndex for prompt orchestration

Symbolic/Logic Solvers

Use Prolog‑style rule engines or SMT solvers (e.g., Z3) for constraint validation and policy enforcement
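To illustrate rule-based policy enforcement without pulling in a solver, here is a tiny forward-chaining engine in Python. It is a deliberately simplified stand-in: Prolog answers queries by backward chaining and Z3 checks the satisfiability of logical formulas, but the core idea of deriving obligations from explicit, auditable rules is the same. The rule and fact names are hypothetical.

```python
# Each rule: (set of premise facts, fact to conclude). Hypothetical security rules.
RULES = [
    ({"failed_logins_spike", "new_geo_login"}, "account_takeover_suspected"),
    ({"account_takeover_suspected", "privileged_account"}, "require_human_review"),
    ({"malware_hash_match"}, "isolate_host"),
]

def forward_chain(facts: set[str], rules) -> set[str]:
    """Apply rules repeatedly until no new facts are derived (a fixpoint)."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= derived and conclusion not in derived:
                derived.add(conclusion)
                changed = True
    return derived
```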

Hierarchical Task Network (HTN) Planning

Break high‑level goals into subtasks using HTN planners, PDDL‑based classical planners, or custom task DAGs
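A custom task DAG is the simplest of these options. The Python sketch below uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to order a hypothetical incident-response plan into waves of subtasks whose dependencies are already satisfied. It does not perform HTN-style method selection; it only schedules a plan that has already been decomposed.

```python
from graphlib import TopologicalSorter

# Hypothetical decomposition of "contain a phishing incident" into subtasks.
# Each key maps a subtask to the set of subtasks it depends on.
plan = {
    "identify_recipients": set(),
    "quarantine_emails": {"identify_recipients"},
    "reset_credentials": {"identify_recipients"},
    "block_sender_domain": set(),
    "notify_users": {"quarantine_emails", "reset_credentials"},
}

def execution_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group subtasks into waves; tasks in the same wave can run in parallel."""
    ts = TopologicalSorter(graph)
    ts.prepare()  # raises graphlib.CycleError if the plan contains a cycle
    waves = []
    while ts.is_active():
        ready = sorted(ts.get_ready())
        waves.append(ready)
        ts.done(*ready)
    return waves
```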

Memory & Context Modules

Employ vector databases (e.g., Pinecone, Weaviate) to store past interactions, allowing the agent to recall prior decisions and outcomes
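The retrieval step behind such a memory module is nearest-neighbor search over embeddings. The Python sketch below is a brute-force, in-memory stand-in for Pinecone or Weaviate (it does not use their client APIs); it assumes embeddings are produced elsewhere, for example by an embedding model, and uses toy 2-D vectors for illustration.

```python
import math

class EpisodicMemory:
    """In-memory stand-in for a vector database: store (embedding, record) pairs
    and retrieve records whose embeddings are most similar to a query.
    Embeddings are assumed to be computed elsewhere (hypothetical)."""

    def __init__(self) -> None:
        self._items: list[tuple[list[float], dict]] = []

    def add(self, embedding: list[float], record: dict) -> None:
        self._items.append((embedding, record))

    @staticmethod
    def _cosine(a: list[float], b: list[float]) -> float:
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def recall(self, query: list[float], k: int = 3) -> list[dict]:
        """Return the k stored records most similar to the query (cosine similarity)."""
        ranked = sorted(self._items, key=lambda it: self._cosine(query, it[0]), reverse=True)
        return [record for _, record in ranked[:k]]
```

A production vector database replaces the linear scan with an approximate nearest-neighbor index, but the contract (store vectors with metadata, query by similarity) is the same.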

Best Practice: Design a hybrid architecture, pairing an LLM for flexible reasoning with a symbolic solver for strict policy checks, to balance creativity and compliance.
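The propose-then-verify pattern behind this hybrid design can be sketched in Python with the LLM abstracted as any callable that returns a proposed action. Here `propose` is a stand-in for a real model call (no specific LLM or LangChain API is assumed), and the two policy functions are hypothetical examples of hard constraints.

```python
from typing import Callable, Optional

# Hypothetical hard policies: each returns an error message, or None if the action passes.
def no_prod_shutdown(action: dict) -> Optional[str]:
    if action["type"] == "shutdown" and action["env"] == "prod":
        return "shutting down production requires human approval"
    return None

def allowlisted_tools(action: dict) -> Optional[str]:
    if action["type"] not in {"block_ip", "isolate_host", "shutdown"}:
        return f"tool {action['type']!r} is not allowlisted"
    return None

POLICIES: list[Callable[[dict], Optional[str]]] = [no_prod_shutdown, allowlisted_tools]

def decide(propose: Callable[[str], dict], observation: str) -> dict:
    """A flexible proposer (e.g., an LLM call) suggests; deterministic policies decide."""
    action = propose(observation)  # untrusted output: treat it as a proposal only
    errors = [msg for msg in (check(action) for check in POLICIES) if msg is not None]
    return {"action": action, "approved": not errors, "errors": errors}
```

Because the policy checks are plain deterministic functions, they can be unit-tested and audited independently of the model.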

2.3 Action Layer (Executing Decisions)

API Integrations & Webhooks

Securely connect to external systems: CI/CD pipelines, cloud management APIs, SIEM/SOAR platforms

Automation Modules

Scripted workflows (e.g., Terraform for infra changes, Ansible for patching)

Transactional wrappers to ensure atomicity and rollback on failure
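When the target systems do not share a transaction manager, one common way to approximate atomicity is compensation: record each completed step and undo the completed steps in reverse order if a later one fails (often called the saga pattern). The Python sketch below shows that idea; step names are hypothetical, and real undo actions (for example, reverting a firewall change) can themselves fail and need their own handling.

```python
from typing import Callable

Step = tuple[str, Callable[[], None], Callable[[], None]]  # (name, do, undo)

def run_transaction(steps: list[Step], log: list[str]) -> bool:
    """Run steps in order; on any failure, undo completed steps in reverse order.

    This is saga-style compensation, not true database atomicity."""
    completed = []
    for name, do, undo in steps:
        try:
            do()
        except Exception as exc:
            log.append(f"failed: {name} ({exc})")
            for done_name, _, done_undo in reversed(completed):
                done_undo()
                log.append(f"rolled back: {done_name}")
            return False
        completed.append((name, do, undo))
        log.append(f"done: {name}")
    return True
```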

Human‑AI Interfaces

Build lightweight dashboards (Streamlit, React) or chatbots (Rasa, Microsoft Bot Framework) to surface decision rationales and allow manual overrides

Best Practice: Implement circuit breakers that automatically pause autonomous flows when they detect anomalous system states or low confidence.
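A circuit breaker can be sketched as a small state machine. This Python version is illustrative: what counts as a "failure" (an anomaly alert, a low-confidence decision, a failed action) is an assumption you would define, and the injectable `clock` exists only to make the behavior testable.

```python
import time
from typing import Callable, Optional

class CircuitBreaker:
    """Pause autonomous actions after repeated failures.

    CLOSED: actions flow. OPEN: actions blocked until cooldown_s elapses.
    HALF_OPEN: one trial action allowed; success closes, failure re-opens.
    What counts as a failure is your own (hypothetical) definition."""

    def __init__(self, max_failures: int = 3, cooldown_s: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.cooldown_s = cooldown_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "CLOSED"
        if self.clock() - self.opened_at >= self.cooldown_s:
            return "HALF_OPEN"
        return "OPEN"

    def allow(self) -> bool:
        return self.state != "OPEN"

    def record(self, success: bool) -> None:
        if success:
            self.failures, self.opened_at = 0, None  # healthy again: close the breaker
        else:
            self.failures += 1
            if self.failures >= self.max_failures or self.state == "HALF_OPEN":
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
```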

2.4 Learning Module (Continuous Evolution)

Reinforcement Learning (RL)

Define reward functions aligned with business KPIs (e.g., minimized incident response time, maximized throughput)
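As an illustration of tying rewards to KPIs, the function below scores one handled incident. The weights, the 30-minute target, and the normalized `business_disruption` input are hypothetical; in practice they should come from the success metrics you defined in step 1, and reward design deserves careful testing because agents can exploit poorly specified rewards.

```python
def incident_reward(response_minutes: float, false_positive: bool,
                    business_disruption: float,
                    target_minutes: float = 30.0,
                    fp_penalty: float = 0.5,
                    disruption_weight: float = 1.0) -> float:
    """Hypothetical shaped reward for an incident-response agent.

    +1 for resolving at or under the target time, decaying linearly to 0 at
    2x the target; minus a fixed penalty for false positives and a weighted
    disruption cost (business_disruption assumed normalized to [0, 1])."""
    speed = max(0.0, min(1.0, 2.0 - response_minutes / target_minutes))
    reward = speed - disruption_weight * business_disruption
    if false_positive:
        reward -= fp_penalty
    return reward
```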

Use libraries such as Ray RLlib or Stable Baselines3 for scalable training

Self‑Correction & Retraining

Periodically retrain models on post‑mortem incident data to incorporate novel threat patterns or market behaviors

A/B Testing & Shadow Modes

Validate new policies in “shadow” deployments that compare them against human‑led baselines without affecting production, then A/B test promising candidates on a limited share of live traffic before full rollout
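A shadow deployment can be sketched as a wrapper that feeds the same inputs to the current (human-led or production) policy and to the candidate, executes only the current policy's decision, and logs disagreements for review. The policy callables in this Python sketch are hypothetical.

```python
from typing import Callable, Iterable

def shadow_compare(events: Iterable[dict],
                   production: Callable[[dict], str],
                   candidate: Callable[[dict], str]) -> dict:
    """Run a candidate policy alongside production without acting on its output.

    Only production decisions are returned for execution; the candidate's
    decisions are recorded for offline comparison. Policies are hypothetical."""
    executed, disagreements, total = [], [], 0
    for ev in events:
        prod_decision = production(ev)
        shadow_decision = candidate(ev)  # logged, never executed
        executed.append(prod_decision)
        total += 1
        if shadow_decision != prod_decision:
            disagreements.append((ev, prod_decision, shadow_decision))
    agreement = 1 - len(disagreements) / total if total else 1.0
    return {"executed": executed, "agreement": agreement, "disagreements": disagreements}
```

The disagreement list is usually more useful than the agreement rate alone, since it shows reviewers exactly where the candidate would have acted differently.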

Best Practice: Maintain a continuous evaluation pipeline (MLflow, Weights & Biases) to track model drift, performance regressions, and data schema changes.
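Tools like MLflow and Weights & Biases track and visualize metrics, but the drift metric itself is yours to define. One common choice for input drift is the population stability index (PSI), sketched below in Python over pre-binned feature distributions. Commonly cited rules of thumb read values below about 0.1 as stable and above about 0.25 as a major shift, but thresholds should be calibrated per feature.

```python
import math

def population_stability_index(expected: list[float], actual: list[float],
                               eps: float = 1e-6) -> float:
    """PSI between two binned distributions (each a list of bin proportions).

    PSI = sum((a - e) * ln(a / e)); eps guards against empty bins.
    Assumes both inputs use the same bin edges."""
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi
```

The resulting value can then be logged per feature as an ordinary metric (for example with `mlflow.log_metric`) so that drift shows up alongside performance regressions.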

3. Architecting for Scale 📈

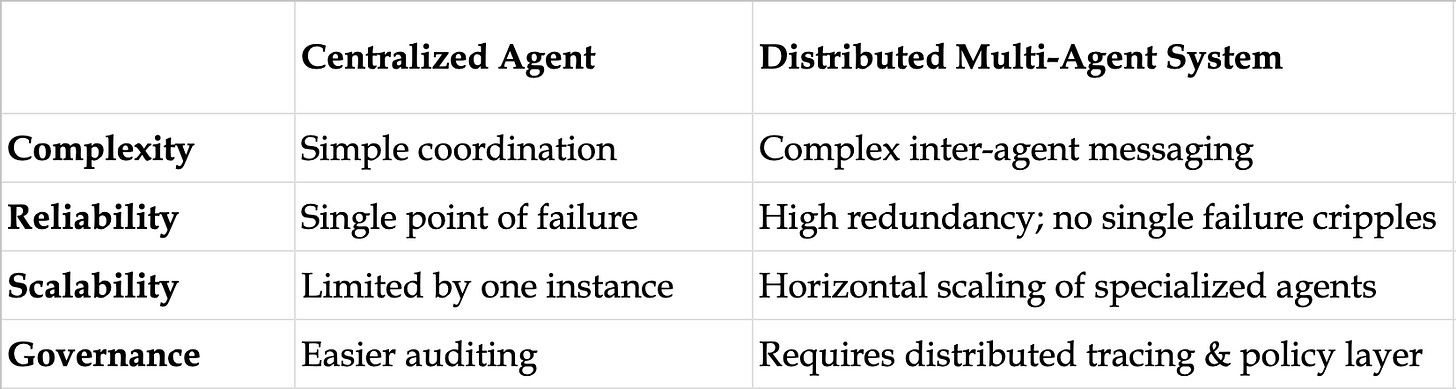

Decide whether your agentic AI will be centralized or distributed; each has distinct trade‑offs:

When to Choose:

Centralized for narrow, high‑risk domains (medical diagnostics, legal compliance)

Distributed for complex ecosystems (enterprise security, global supply chains)

4. Implement Guardrails for Safety & Interpretability 🦺

Embedding trust begins with clear governance frameworks and transparency mechanisms:

4.1 Explainability & Transparency

Feature Attribution: SHAP or LIME to highlight which inputs drove decisions

Reasoning Trace Logs: Structured “chain‑of‑thought” records that document each inference step

User Reports: Auto-generated summaries in natural language that explain why an action was taken
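Reasoning trace logs and user reports can share one data structure: log each step as a structured record, serialize it for audit, and render a short plain-language summary from it. The Python sketch below is illustrative; the step kinds and the summary template are hypothetical, and it records the agent's explicit pipeline steps rather than a model's hidden internal reasoning.

```python
import json
import uuid
from datetime import datetime, timezone

class DecisionTrace:
    """Append-only structured record of the steps behind one agent decision."""

    def __init__(self, goal: str) -> None:
        self.trace_id = str(uuid.uuid4())
        self.goal = goal
        self.steps: list[dict] = []

    def log(self, kind: str, detail: str, **data) -> None:
        self.steps.append({
            "ts": datetime.now(timezone.utc).isoformat(),
            "kind": kind,  # hypothetical kinds: "observation", "inference", "action"
            "detail": detail,
            "data": data,
        })

    def to_json(self) -> str:
        """Serialize the full trace for audit storage."""
        return json.dumps({"trace_id": self.trace_id, "goal": self.goal, "steps": self.steps})

    def summary(self) -> str:
        """Plain-language report generated from the logged steps."""
        actions = [s["detail"] for s in self.steps if s["kind"] == "action"]
        reasons = [s["detail"] for s in self.steps if s["kind"] == "inference"]
        return (f"Goal: {self.goal}. Took: {'; '.join(actions) or 'no action'}. "
                f"Because: {'; '.join(reasons) or 'n/a'}.")
```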

4.2 Risk Mitigation & Redundancy

Fail‑Safe Defaults: Revert to a known‑good state when confidence falls below a set threshold

Meta‑Monitoring Agents: Overwatch processes that flag anomalous behavior for human review

Fairness & Bias Audits: Tools like Fairlearn to detect and correct skew across demographic or operational slices
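A per-slice audit comes down to computing the same error metric separately for each group and comparing the results. Fairlearn's `MetricFrame` does this for arbitrary metrics; the dependency-free Python sketch below shows the underlying computation for false-positive rate. The record fields and the slice key (e.g., region or business unit for an operational slice) are hypothetical.

```python
from collections import defaultdict

def false_positive_rate_by_slice(records: list[dict], slice_key: str) -> dict:
    """FPR = FP / (FP + TN), computed separately for each value of slice_key.

    Each (hypothetical) record needs booleans 'predicted' (agent flagged it)
    and 'actual' (truly bad), plus the slice field."""
    fp, tn = defaultdict(int), defaultdict(int)
    for r in records:
        if not r["actual"]:  # only actual negatives contribute to FPR
            if r["predicted"]:
                fp[r[slice_key]] += 1
            else:
                tn[r[slice_key]] += 1
    return {s: fp[s] / (fp[s] + tn[s]) for s in set(fp) | set(tn)}

def max_gap(rates: dict) -> float:
    """Largest difference in rate between any two slices."""
    return max(rates.values()) - min(rates.values()) if rates else 0.0
```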

4.3 Ethical Alignment & Compliance

Hard Constraints: Embedded policy rules (e.g., GDPR data‑handling restrictions) enforced via logic solvers

Safety Thresholds: Human‑in‑the‑loop gates when decisions carry significant impact (financial trades, life‑critical systems)

Continuous Auditing: Scheduled third‑party reviews, red‑team exercises, and compliance checks

Best Practice: Adopt the IEEE 7000™ series standards or NIST AI Risk Management Framework to guide ethical and regulatory alignment.

The Path to Responsible Agentic AI 🤖

Building an agentic AI system from scratch isn’t just a technical endeavor: it’s a commitment to shaping how autonomous technologies integrate with society. As these agents take on ever‑greater responsibilities in healthcare, finance, cybersecurity, and beyond, we must:

Define Shared Benchmarks: Establish industry‑wide metrics for fairness, reliability, and interpretability.

Institutionalize Ethical Review: Create multidisciplinary panels (engineers, ethicists, domain experts) to vet designs and monitor deployments.

Cultivate Human‑AI Collaboration: Balance autonomy with oversight by designing agents that augment human judgment rather than replace it.

At each stage, from scoping the problem to deploying guardrails, ask yourself: “Are we building agents that serve human values, or merely automating old patterns faster?” The choices you make today will determine whether agentic AI becomes a force for innovation guided by responsibility, or a technology divorced from the ethical foundations it must uphold.


Innovating with integrity,

@AIwithKT 🤖🧠